Groq, the startup that could bring Nvidia to its knees

Groq is the newcomer everyone is talking about. This start-up dares to challenge Nvidia, the giant of graphics processors and artificial intelligence. And Groq isn't just dreaming: it is doing so with its brand-new LPU (Language Processing Unit), which promises to speed up the responses of language models like ChatGPT.

Groq, a start-up little known to the general public, could pose serious challenges to Nvidia, the undisputed leader in graphics processing and AI technology. At least, that is what can be read in the media and on X (formerly Twitter).

But who is Groq?

Context

Groq’s observation is that the computers and processors we currently use are designed to handle many tasks at once. They have become extremely complex, with many parts working together. Getting all of this to run quickly and efficiently is a real headache, especially for artificial intelligence workloads where an already-trained model has to make decisions quickly (what is called inference). Predictions, in particular, must be delivered fast and without consuming too much energy.

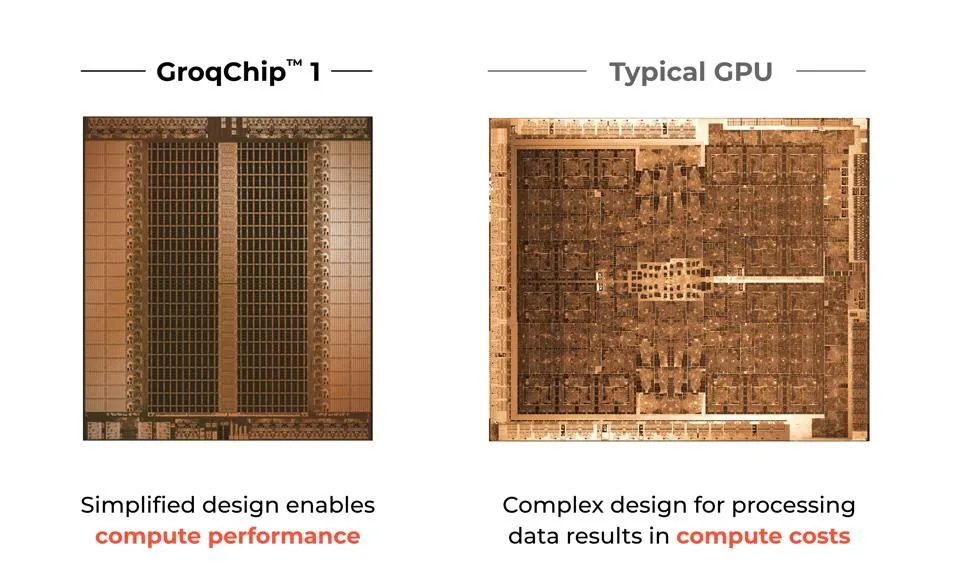

To cope with this, processor designers added ever more components and functions to their chips, making them even more complex. But for specific workloads such as AI, these additions did not really make things faster.

Graphics processing units (GPUs) are a partial solution because they can perform many calculations in parallel, which is great for images or video, for example. But they also reach their limits, especially when data has to be processed quickly, without time lost waiting for it.

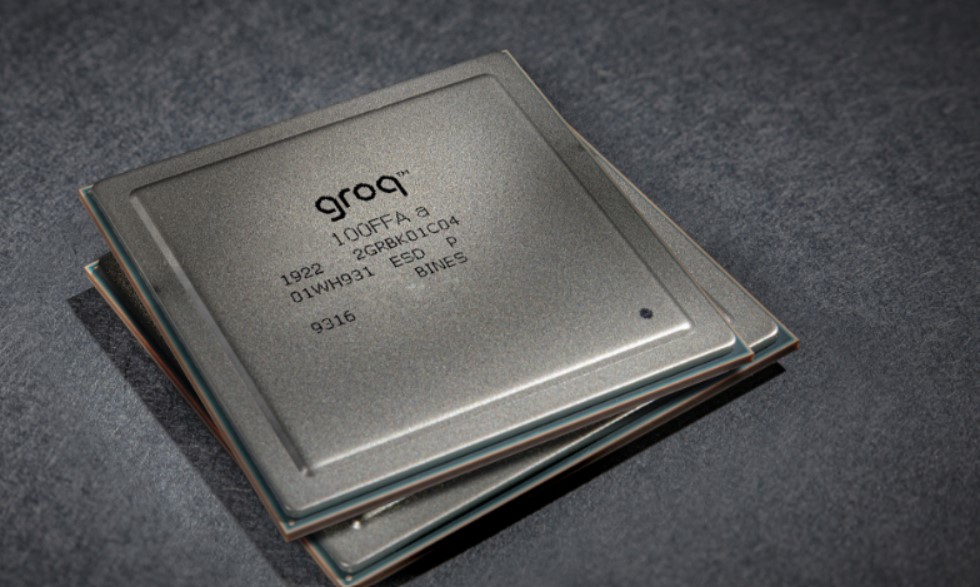

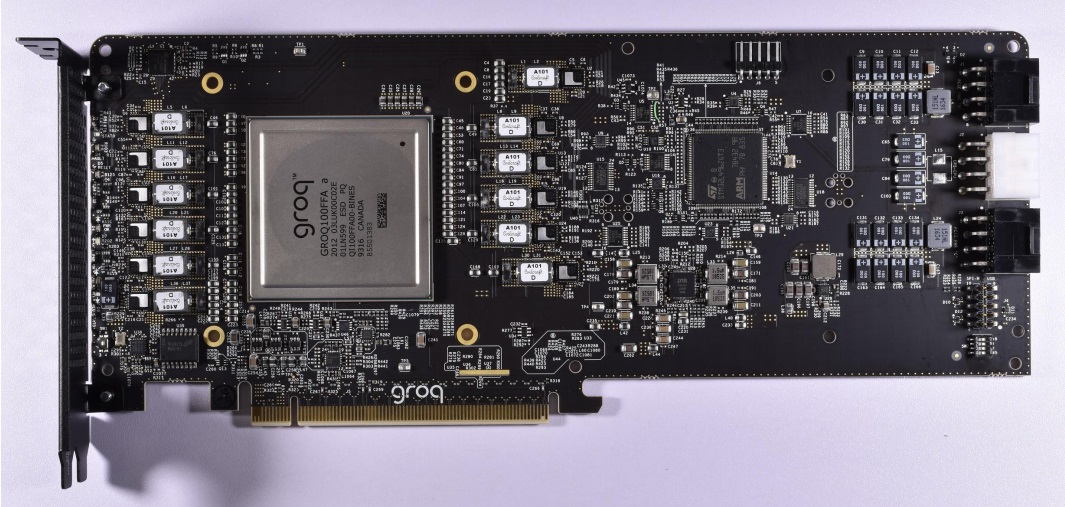

So Groq came up with something different. They created a type of chip called a tensor streaming processor (TSP).

Groq’s LPU (Language Processing Unit)

With its LPU (Language Processing Unit), Groq promises to run AI models, including language models like ChatGPT, ten times faster than current GPU-based solutions. Even better, it claims a price-performance ratio 100 times better than Nvidia's. Enough to bring Nvidia to its knees, even though the American company has been on cloud nine for several months and is now worth as much as Meta and Amazon.

The key to this advancement lies in the unique architecture of the GroqChip, a chip specifically designed to optimize AI prediction tasks.

Unlike traditional GPUs, which rely on high-bandwidth memory (HBM), Groq’s LPUs use SRAM, which is nearly twenty times faster. This approach should significantly reduce energy consumption and improve data processing efficiency, especially for inference, which handles less data than model training does.
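To see why memory speed matters so much for inference, here is a back-of-the-envelope sketch. It assumes a deliberately simplified model in which generating each token requires reading every model weight from memory once, so the time per token is roughly the weight size divided by the memory bandwidth. The model size and HBM bandwidth below are hypothetical placeholders, not Groq or Nvidia specifications; only the factor of twenty comes from the paragraph above.

```python
# Back-of-the-envelope model of memory-bound token generation.
# Assumption: each generated token streams all weights from memory once.
# All figures are hypothetical placeholders, not vendor specifications.

def tokens_per_second(weight_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Upper bound on decode speed when memory bandwidth is the only limit."""
    seconds_per_token = weight_bytes / bandwidth_bytes_per_s
    return 1.0 / seconds_per_token

WEIGHT_BYTES = 14e9                  # hypothetical: 7 billion parameters at 2 bytes each
HBM_BANDWIDTH = 3e12                 # hypothetical HBM bandwidth, in bytes per second
SRAM_BANDWIDTH = 20 * HBM_BANDWIDTH  # the article's "nearly twenty times faster" figure

hbm_rate = tokens_per_second(WEIGHT_BYTES, HBM_BANDWIDTH)
sram_rate = tokens_per_second(WEIGHT_BYTES, SRAM_BANDWIDTH)

print(f"HBM:  {hbm_rate:.0f} tokens/s")
print(f"SRAM: {sram_rate:.0f} tokens/s")
```

Under these assumptions the speedup is simply the bandwidth ratio. The sketch deliberately ignores that on-chip SRAM holds far less data than HBM, so in practice a large model has to be split across many chips, and it also ignores compute limits and batching.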

The GroqChip also differs from current GPUs in its processing approach, based on a temporal instruction set that eliminates the need to repeatedly reload data from memory. This technique not only circumvents the limits imposed by the HBM shortage but also reduces production costs.

The GroqChip’s effectiveness isn’t measured in speed alone. Compared to Nvidia’s H100 chips, its price/performance ratio is estimated to be a hundred times higher. What makes the difference is its approach to sequential processing, which suits natural language and any other data that follows a sequence.

This performance stems from a design specialized in processing large language models (LLMs), taking direct inspiration from Google’s Tensor Processing Units (TPUs). It must be said that behind Groq is its founder and CEO, Jonathan Ross, one of the creators of the TPU, the chip behind Google’s AI.

Groq’s approach, which favors lower clock speeds, wide parallelism and low power consumption, contrasts with Nvidia’s, which is geared towards faster execution of matrix calculations and more efficient management of main memory. This fundamental difference is reinforced by gains in energy efficiency and the move to more advanced manufacturing processes. For the moment, Groq’s chips are manufactured on a 14 nm process, but a move to 4 nm is already being considered.

In short, Groq’s chips promise to be a game changer for LLMs, with speed and efficiency that leave the competition far behind. This also suggests that reliance on Nvidia GPUs could be drastically reduced.

Don’t bury Nvidia too quickly

We shouldn’t bury Nvidia too quickly. The American company continues to innovate in the field of GPUs and beyond. Remember that the unmatched performance of GPUs for training is a key competitive advantage.

Additionally, Nvidia has built a robust software ecosystem around CUDA and its associated tools, making AI applications on its GPUs more accessible and efficient. This rich ecosystem is hard to compete with and makes researchers and developers strongly dependent on Nvidia products.

Nvidia has also established numerous partnerships with cloud providers, computer manufacturers and research institutes, ensuring widespread adoption of its GPUs for AI training and inference.

In short, this is one to watch.
