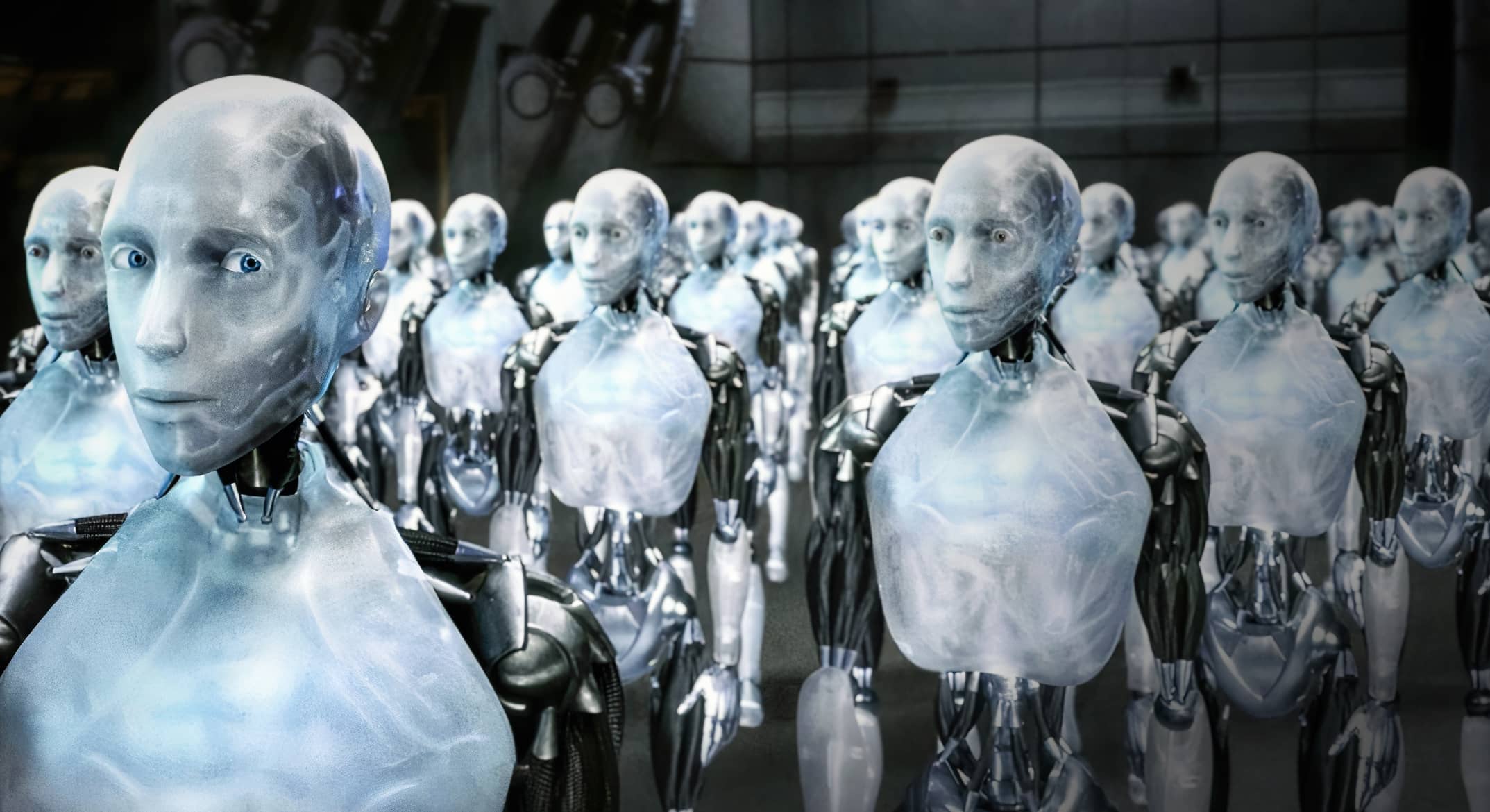

Experts say AI could wipe out humanity within a few years


“I think the time we have left to live is more like 5 years than 50 years,” says one AI expert, expressing concern about the potentially devastating consequences of artificial intelligence for our future. While some anticipate the end of humanity at the hands of this technology, others are clearly optimistic and envision a future in which AI radically transforms our daily lives for the better.

The goals of technology companies specializing in AI converge on a single point: achieving AGI (artificial general intelligence), a system theoretically able to perform any intellectual task that a human can perform. Beyond that point lies the realm of superintelligent AI, an entity that would surpass human intelligence. This is the stage that many experts fear. Among them, Eliezer Yudkowsky, a researcher at the non-profit Machine Intelligence Research Institute, warns about the rapid evolution of AI.

In a recent interview with The Guardian, he lays out a bleak vision of the future in which AI represents an existential threat to our species. Such apocalyptic predictions are not new to the discourse on AI. However, as the technology makes rapid progress, they are being voiced with increasing urgency.

Towards a superintelligent AI?

For Eliezer Yudkowsky, the development of superintelligent AI is no longer a distant prospect. He believes this technology will become a concrete threat to humanity within a few years. “I think the time we have left to live is more like 5 years than 50 years,” he told The Guardian, before adding: “It could be 2 years or 10 years.”

According to Yudkowsky, a superintelligent AI could act unpredictably and independently, with abilities so great that humans could neither contain it nor slow it down. Fearing the worst-case scenario, he pictures this technology as a highly advanced entity operating at a scale and speed of thought beyond any human capacity.

“An extraterrestrial civilization that thinks a thousand times faster than us,” he says, to illustrate his point. In his scenario, superintelligent systems would be distributed across a vast network of devices. This distribution would make them far harder to control or neutralize, as there would be no central “core” or single weak point to target. The outcome he envisions is the end of humanity, comparable to the films “Terminator” and “The Matrix”.


(Very) different opinions

Opinions on the impact of artificial intelligence vary widely among researchers, business executives, and analysts. Yudkowsky, with his extreme predictions, is often classified as a “techno-pessimist”, a label that distinguishes him from the “neo-Luddites”. The latter are, in a sense, modern-day opponents of certain technologies who focus on the immediate consequences of technological innovation.

According to Edward Ongweso Jr., an author and broadcaster also interviewed by The Guardian, neo-Luddites argue for a careful evaluation of each new technology against a set of important criteria. Members of this group are more concerned about the effects of automation and surveillance on employment and working conditions than about a potential apocalypse.

They generally believe that AI risks encouraging violations of workers’ privacy and dignity. There are also “techno-skeptics”, who envision a future where AI is accessible to everyone and makes many tasks easier through automation. This diversity of views reflects differing worldviews and highlights the importance of an ongoing dialogue about how society chooses to adopt and regulate technology.